NUARI and Norwich Researchers Publish Paper Calling for Responsible Integration and Interpretability of AI-Generated Reports for Law Enforcement

Hot off the presses! We are happy to share an article authored by Norwich University and NUARI researchers for the Information Professionals Association.

The article was authored by Dr. Ali Al Bataineh, Director of the Artificial Intelligence Center at Norwich University; Rachel Sickler, Senior Developer and Machine Learning Engineer, NUARI; Dr. W. Travis Morris, Director of the NU Peace and War Center and Director of the School of Criminology and Criminal Justice; and Dr. Kristen Pedersen, Vice President and Chief Research Officer, NUARI:

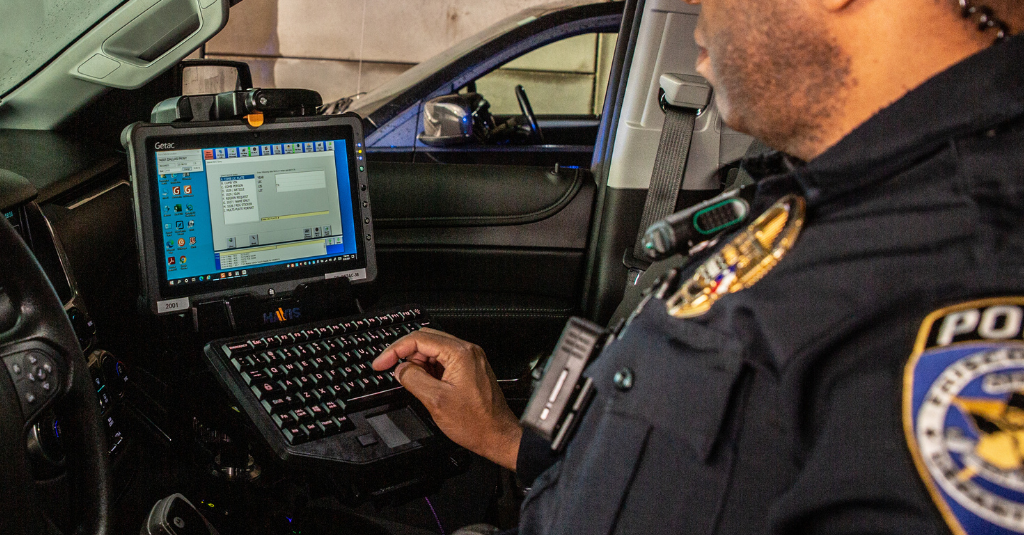

Recognizing that the advent of artificial intelligence (AI) has brought significant changes across all sectors, including law enforcement (LE), the article advocates for the responsible integration of AI in law enforcement and argues that AI-generated reports belong on the list of technological developments that require responsible integration and interpretability.

The authors outline the challenges of using AI-generated reports in law enforcement and the need for careful implementation and evaluation. They also discuss ethical considerations, best practices for AI implementation, and future research directions.

The full article, available on IPA’s website, goes into greater detail on AI-generated reports, but we’d like to highlight the following section:

AI and the Future of Law Enforcement

To ensure ethical, accurate, and transparent AI implementation, LE agencies should follow the best practices below. There are five necessary elements to successfully integrating the power of AI into LE in general, and into the creation and use of AI-generated reports more specifically. We recommend the following:

Cross-Disciplinary Collaboration: Partnerships between AI developers, law enforcement professionals, policymakers, and social scientists to advance the understanding of AI opportunities and challenges, while serving the public good.

Public-Private Synergy: Building collaboratives between law enforcement agencies, community organizations, and private AI developers to develop and implement innovative AI tools tailored to LE needs and constraints. These partnerships would promote transparency, accountability, and trust in the validity and neutrality of AI-generated reports.

Continuous Training and Education: As AI becomes more integrated into law enforcement practices, it is essential to train law enforcement personnel to use AI-generated reports effectively and appropriately. This training should include instruction on the ethical implications, potential biases and misinformation, and limitations of AI-generated reports.

Adaptive Policymaking: Policymakers must remain vigilant and adaptive, updating AI regulations and guidelines as the technology evolves. This requires ongoing engagement with various stakeholders, including AI developers, law enforcement professionals, the court system, and community members, to identify emerging concerns and develop effective responses (Crawford and Calo 2016).

Public Awareness and Engagement: Public discussion is necessary for a broad understanding of how LE uses AI. Such discussion would help ensure that the technology aligns with societal values and expectations and that the process is explainable. LE must foster public trust and strive for transparency through awareness campaigns and other forms of community dialogue.

The full article can be found on the Information Professionals Association’s website.

Jakon Hays

Jakon is the Senior Marketing and Strategic Communications Specialist for Norwich University Applied Research Institutes (NUARI). He develops and executes digital and social media awareness initiatives promoting NUARI's mission of enabling a resilient society through rapid research, development, and education in cybersecurity, defense technologies, and information warfare.

